Responsible AI & Tech for Good

I work on applying AI responsibly for socially beneficial purposes.

I’ve been working on multiple “tech for good” projects, including AI-assisted communication for people with motor control and communication impairments and AI-based detection of harmful content in social media videos.

Over the past years, I have been advocating for AI Safety by following upcoming AI regulations, attending conferences (e.g. AIES, London AI Governance Talks, AI Summit Talks, ICCV) and writing about them (see my blog).

I worked on several related projects, such as one on personal information leakage with ORCAA (an AI auditing company) and the Twitter Bias Challenge in 2021. My understanding of the pervasive and often elusive issues inherent in everyday AI applications deepened further during my Research & Engineering work in Trust & Safety at Unitary.

Selected Projects

Twitter Global Class Bias

My submission to the first Twitter Algorithmic Bias Challenge. I tested Twitter's image thumbnail cropping algorithm on images of cheap versus expensive objects, using the income labels in the Dollar Street dataset as a proxy for cost. I found that the algorithm is biased towards cheap rooms and spaces and towards expensive objects. See the report for the detailed findings.

Online Safety

At Unitary, I had the chance to gain more experience in industry R&D, people management, and managing data pipelines from annotation to evaluation in the context of Trust & Safety. The data covered all modalities: text, images, video, audio and speech.

I worked on developing, rigorously benchmarking and evaluating our models for detecting harmful content in videos. I also worked on cross-functional projects to organise data pipelines and the annotation taxonomy, and to develop metrics for monitoring ever-changing internal datasets in a way that supports model training and evaluation.

Our latest paper (featured in the ‘Best of ICCV 2023’ magazine) was on ML-assisted labelling, exploiting LLMs and image models for video classification.

Responsible AI

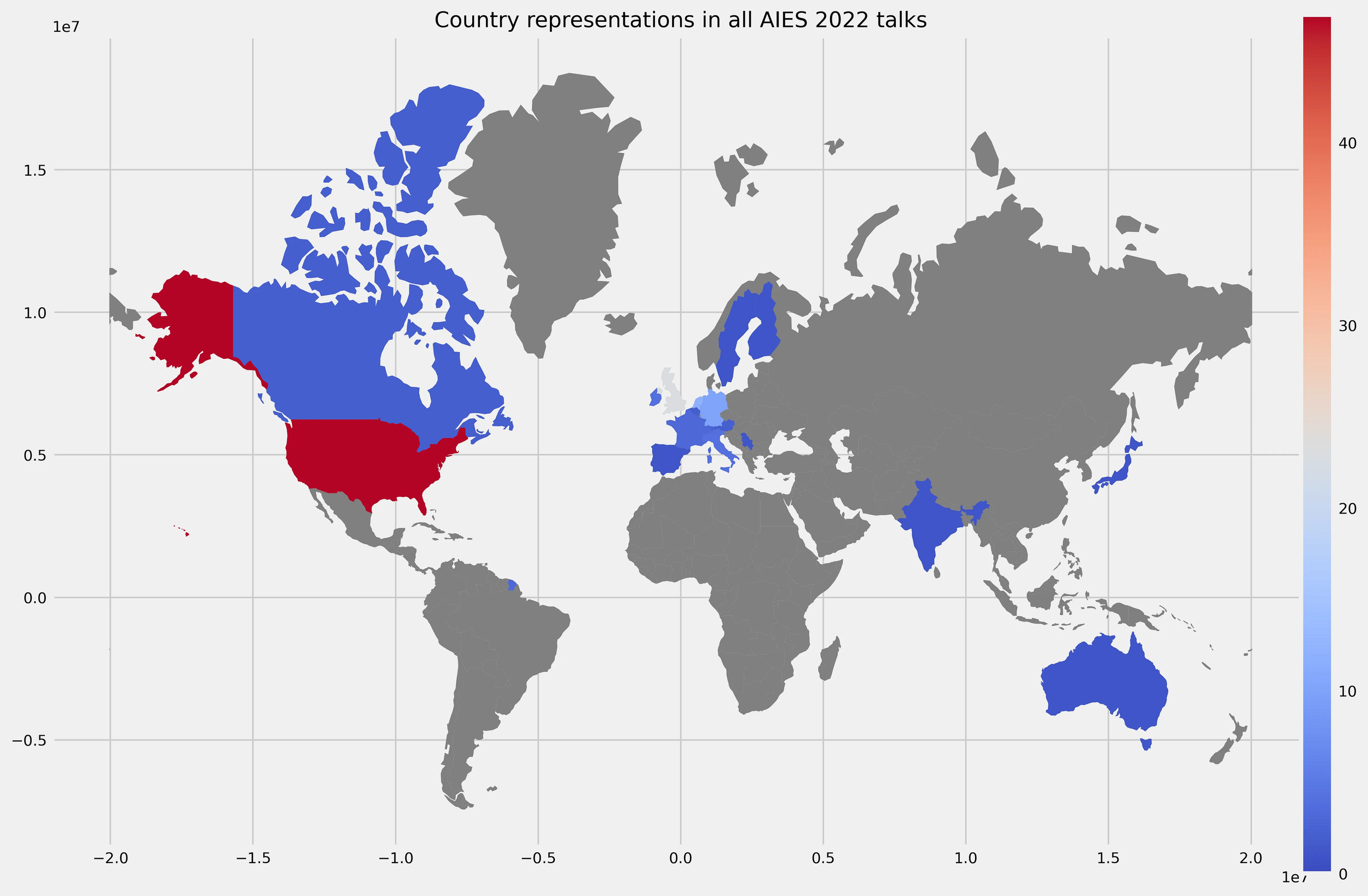

You can read my overview of the Artificial Intelligence, Ethics and Society 2022 conference themes and an analysis of the university/company and country representations at the conference, pursuing the question:

Are we biased while talking bias?

For further content on Responsible AI, see my blog.

AI-Assisted Communication

This award-winning project involved developing a wearable augmented reality tool to assist patients with speech and motor control impairments. At its core, the tool included an ML model that generates text from a series of pictures that patients select using the tool. I coordinated researchers, developers, social workers, caretakers and patients. Besides organising the development and testing of the prototype, I developed the core generative model.

Awards won:

2014 - Award of the Challenge Handicap & Technologies 2014 - Reseau Telepresence system Nouvelles Technologies APF conference in Lille, France

2014 - Best Student Video Award at AAAI Video Competition

Mixed Reality for Dementia Patients

With the German Research Center for Artificial Intelligence (DFKI), we worked on the Kognit project: Cognitive Models and Mixed Reality for Dementia Patients.

I developed image‑based representations of phrases towards a goal‑oriented, symbol‑based dialogue system.

MediaWiki for European Robotic Surgery

I developed a MediaWiki-based collaborative content management system for the EuRoSurge (European Robotic Surgery) project. It won the Third Prize at the National Conference of Students’ Scholarly Circles (OTDK) in 2013.